Understanding Edge Servers: What They Are and How They Work

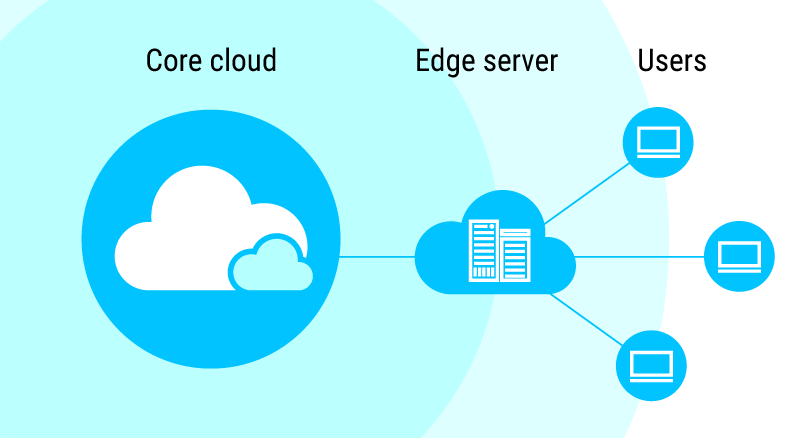

Edge servers, also known as edge computing servers, are servers designed to bring computing power closer to end users. They are deployed across many geographically dispersed sites, typically close to the users they serve, and they process data and perform tasks that would otherwise be handled by centralized data centers or cloud servers.
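To make "close proximity" more concrete, here is a minimal sketch that picks the edge server geographically nearest to a user with the haversine great-circle formula. The server names and coordinates are hypothetical, and real deployments usually steer users with DNS, anycast, or measured latency rather than raw distance alone.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean radius of the Earth


@dataclass(frozen=True)
class EdgeServer:
    name: str   # hypothetical server identifier
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_edge(user_lat: float, user_lon: float, servers: list[EdgeServer]) -> EdgeServer:
    """Return the server with the smallest great-circle distance to the user."""
    return min(servers, key=lambda s: haversine_km(user_lat, user_lon, s.lat, s.lon))


# Hypothetical edge sites (approximate city coordinates).
SERVERS = [
    EdgeServer("edge-frankfurt", 50.11, 8.68),
    EdgeServer("edge-virginia", 38.95, -77.45),
    EdgeServer("edge-singapore", 1.35, 103.82),
]

# A user in Paris is routed to the closest site.
best = nearest_edge(48.86, 2.35, SERVERS)
print(best.name)  # edge-frankfurt
```

Distance is only a proxy for latency; network topology and congestion can make a farther site faster in practice.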

The main goal of edge servers is to reduce latency and improve the overall user experience by processing data near where it is produced instead of sending it to a distant, centralized location; the farther data has to travel, the longer each round trip takes. This is particularly important for applications that require real-time responses, such as video streaming, online gaming, and autonomous vehicles.
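As a rough illustration of why distance matters, the sketch below estimates the minimum round-trip propagation delay, assuming signals travel through optical fiber at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum). It ignores queuing, routing, and processing time, so real latencies are higher; the two distances are illustrative assumptions.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber, in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Illustrative distances: a nearby edge site vs. a distant central data center.
edge_km = 50
central_km = 4_000

print(f"edge:    {round_trip_ms(edge_km):.1f} ms")     # edge:    0.5 ms
print(f"central: {round_trip_ms(central_km):.1f} ms")  # central: 40.0 ms
```

Under these assumptions, each 1,000 km adds about 10 ms per round trip, so the distant path alone already exceeds the roughly 16.7 ms a 60 frame-per-second game has to produce each frame.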

Edge servers often run on compact hardware and lightweight software, and they are usually organized as a distributed architecture so that new sites can be brought online quickly and capacity can scale with demand. They also typically include built-in redundancy and failover, so that if one node fails, traffic can be redirected to another and the service stays available.
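The failover behavior described above can be sketched as a client that tries nodes in order of preference and falls back to the next one when a node is unhealthy. The node names, the central `origin` fallback, and the `is_healthy` check are hypothetical stand-ins; production systems usually delegate this to load balancers, health probes, or DNS-based failover.

```python
from typing import Callable, Iterable, Optional


def pick_available(nodes: Iterable[str], is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first healthy node in preference order, or None if all are down."""
    for node in nodes:
        if is_healthy(node):
            return node
    return None


# Hypothetical nodes, ordered from most to least preferred (e.g. by proximity),
# with a central origin server as the last resort.
NODES = ["edge-a", "edge-b", "origin"]

# Simulated health state: edge-a has failed.
health = {"edge-a": False, "edge-b": True, "origin": True}

chosen = pick_available(NODES, lambda n: health.get(n, False))
print(chosen)  # edge-b
```

Keeping the health check as a parameter lets the same selection logic work with whatever probing mechanism a deployment actually uses.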

In addition to improving performance and reducing latency, edge servers can help reduce network congestion and lower bandwidth costs: by processing or filtering data locally and forwarding only the results, they avoid sending large volumes of raw data over long distances. This is especially valuable in areas with limited network bandwidth or heavy network traffic.
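To illustrate how local processing can save bandwidth, the sketch below has a hypothetical edge node summarize a batch of sensor readings and send only the summary upstream, then compares the payload sizes. The readings, the summary fields, and the JSON encoding are assumptions chosen for illustration; actual savings depend on the workload.

```python
import json
import random
import statistics


def summarize(readings: list[float]) -> dict:
    """Reduce a batch of raw readings to a compact summary computed at the edge."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical batch: 1,000 temperature readings from a local sensor.
random.seed(0)
readings = [round(random.uniform(20.0, 25.0), 2) for _ in range(1000)]

# Bytes that would cross the network with and without edge aggregation.
raw_bytes = len(json.dumps(readings).encode())
summary_bytes = len(json.dumps(summarize(readings)).encode())

print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The trade-off is that the central system no longer sees individual readings, so this pattern suits cases where aggregates are sufficient.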

Overall, edge servers are an important component of modern computing infrastructure, enabling faster and more reliable data processing and delivering better user experiences.